MemVerge has launched an open source MemMachine software project to provide a cross-platform, long-context memory layer for large language models (LLMs) and agentic AI.

MemVerge provides Memory Machine software to virtualize DRAM, combining a server CPU’s memory with an external memory tier. This lets servers work with more data than their own over-burdened local memory capacity could hold. MemVerge claims the MemMachine software, with an associated enterprise offering, will deliver the world’s most accurate AI Memory system with fast recall and a foundation for human-like memory in machines.

Charles Fan, MemVerge co-founder and CEO, stated: “AI without memory is incomplete. MemMachine delivers the memory layer that makes AI agents truly intelligent, personal, and enterprise-ready. This is the beginning of the next generation of agentic AI, and we are proud to deliver the world’s most powerful AI memory system.”

MemVerge waxes lyrical about its new software, saying: “The long-term vision for MemMachine is to parallel – and surpass – the richness of human memory. Like people, it will enable agents to retain episodic experiences, semantic knowledge, and procedural skills. Unlike people, it will deliver limitless recall, instant context retrieval, perfect fidelity, and secure sharing across agents, applications, and enterprises. The result: AI that acts as a true collaborator, remembering everything important, forgetting nothing critical, and scaling far beyond biological limits.”

MemMachine is not another KV cache offload engine. MemVerge claims it is “providing a persistent, intelligent memory layer that retains episodic, personal, and procedural knowledge across sessions, models, agents, and environments. The result: assistants that evolve from disposable chatbots into trusted, context-aware collaborators.”

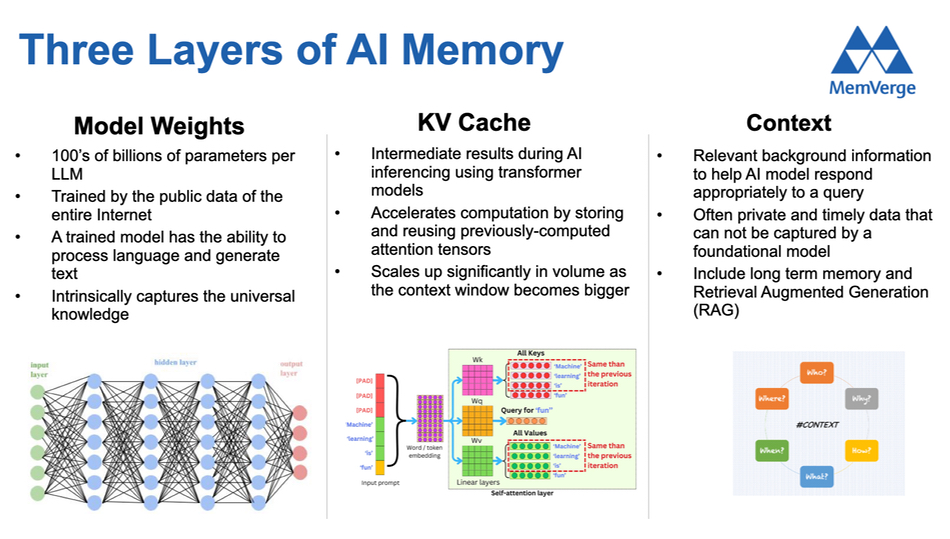

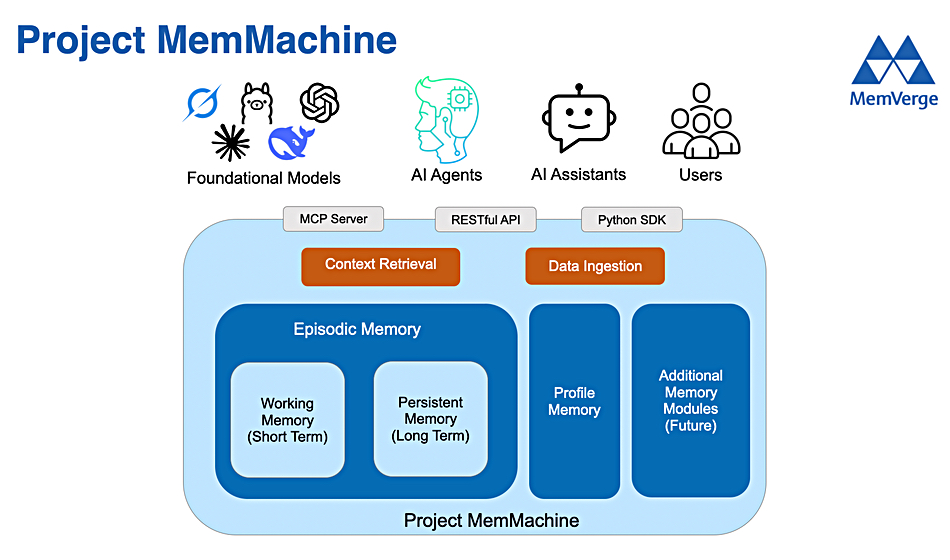

A chart illustrates this additional memory layer concept:

The model weights are intrinsic to an LLM agent while the KV cache is a run-time memory. Context is the MemMachine area, with Fan writing in a blog (with his italics and bold text): “When I say memory, I don’t mean a long prompt or a vector store bolted onto a chatbot. I mean a cross-model, multi-agent, policy-aware, low-latency memory layer that captures, organizes, and retrieves knowledge with intent. Concretely, that layer should support four complementary modes:

- Episodic memory – “What happened?” Persistent records of past interactions and outcomes, time-stamped and traceable.

- Semantic memory – “What does it mean?” Concepts, entities, and relationships distilled from raw data.

- Procedural memory – “How do we do it?” Steps, playbooks, and skills that agents can reuse and adapt.

- Profile memory – “Who am I working with?” Durable knowledge of user identities, roles, preferences, and constraints, enabling personalization and continuity.

“Enterprises need all four. Episodic for continuity, semantic for understanding, procedural for action, and profile for personalization. Together they transform assistants from single-turn tools into reliable, context-aware collaborators.”

He adds: “For [AI] memory to be truly enterprise-grade, it must perform with the same rigor as other core infrastructure. It must deliver retrieval speeds fast enough to keep GPUs fully utilized, but just as importantly, it must be accurate and relevant – returning the right piece of context at the right time. It should be secure by design, with encryption, fine-grained access, and full auditability. It should work seamlessly across different clouds and models, avoiding vendor lock-in, and it must come with the observability, quotas, and service-level guarantees that enterprises expect from production systems. Memory is not a toy or a demo feature. It is infrastructure, and it must behave accordingly.”
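As a rough illustration of the four memory modes Fan lists (episodic, semantic, procedural, and profile), the sketch below tags each stored record with its mode and filters on recall. It is a toy, in-process example and not MemMachine's API: the names `MemoryKind`, `MemoryRecord`, and `ToyMemoryStore`, and the sample records, are all hypothetical and chosen only for this illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List, Optional


class MemoryKind(Enum):
    """The four modes named in Fan's blog (labels are illustrative)."""
    EPISODIC = "episodic"      # "What happened?"
    SEMANTIC = "semantic"      # "What does it mean?"
    PROCEDURAL = "procedural"  # "How do we do it?"
    PROFILE = "profile"        # "Who am I working with?"


@dataclass
class MemoryRecord:
    kind: MemoryKind
    subject: str   # hypothetical key: the user or agent the record is about
    content: str
    # Time-stamp every record so episodic entries stay traceable.
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class ToyMemoryStore:
    """Hypothetical in-memory store; a real system would persist records."""

    def __init__(self) -> None:
        self._records: List[MemoryRecord] = []

    def remember(self, kind: MemoryKind, subject: str, content: str) -> MemoryRecord:
        record = MemoryRecord(kind=kind, subject=subject, content=content)
        self._records.append(record)
        return record

    def recall(self, subject: str, kind: Optional[MemoryKind] = None) -> List[MemoryRecord]:
        # Return records for one subject, optionally narrowed to one mode,
        # in the order they were stored.
        return [
            r for r in self._records
            if r.subject == subject and (kind is None or r.kind == kind)
        ]


# Hypothetical usage: one profile fact and one past interaction for a user.
store = ToyMemoryStore()
store.remember(MemoryKind.PROFILE, "alice", "prefers low-risk funds")
store.remember(MemoryKind.EPISODIC, "alice", "asked about bond ladders")
profile_facts = store.recall("alice", MemoryKind.PROFILE)
```

Keeping the mode as an explicit field, rather than mixing all records together, is one simple way to let an agent ask for "who is this user" separately from "what happened last time". The retrieval, security, and observability requirements Fan describes are outside the scope of this sketch.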

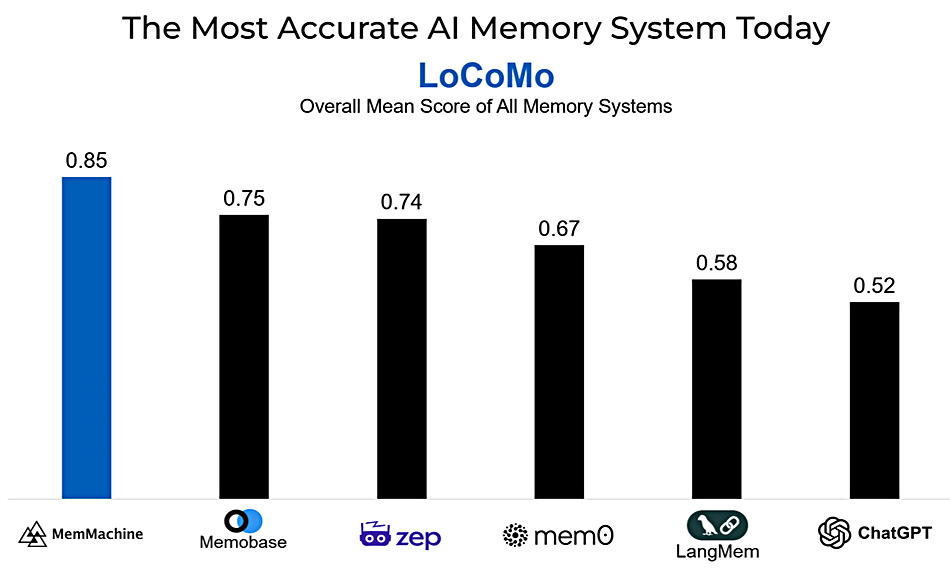

MemVerge has benchmarked MemMachine against ChatGPT, Zep, LangMem and other AI memory systems using LoCoMo, a test of long-context memory systems. LoCoMo consists of long conversation data and a collection of 500 question-answer pairs, and it measures the percentage of correct answers. MemMachine led the pack with its 85 percent score:

The MemMachine software features:

- Open Source software with Apache 2.0 license

- Inclusion of Episodic Memory and Profile Memory for users, AI assistants and AI agents

- Support for all foundational LLMs including OpenAI, Claude, Gemini, Grok, and open source models

- Ability to deploy in any environment, cloud or on-prem

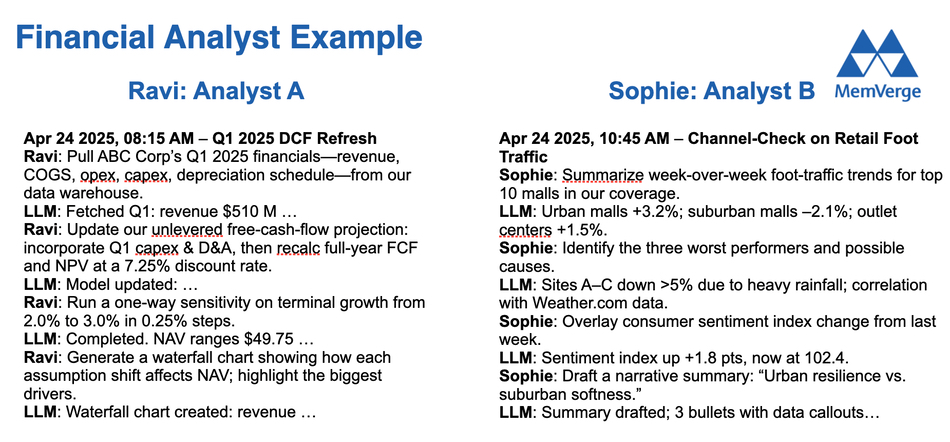

MemVerge identifies several agent examples in its ambitious scheme: benchmark agent, personalized context agent, SlackCRM agent, coding assistant, exec assistant, creative assistant, financial advisor agent and customer support agent. It provided a slide showing interactions with a financial agent:

This certainly looks highly professional, but we think potential investors would want this to be rigorously tested before chancing their dollars, pounds, euros or any other currency.

MemVerge says every enterprise deploying AI agents – in customer service, healthcare, finance, and beyond – will require secure memory infrastructure to enable productivity, personalization, compliance, and trust. Its MemMachine software “enables agents and applications to access, store, and retrieve context with real-time personalization, faster task execution, and fluid orchestration of complex workflows. MemMachine works seamlessly across major LLMs – OpenAI, Claude, Gemini, Grok, Llama, DeepSeek, Qwen and other models, and can be deployed on any cloud or on-prem.”

Fan writes: “Our goal is to make AI memory as fundamental as databases or storage systems – a dependable layer enterprises can standardize on for the decades ahead, and to deliver MemMachine as the most powerful AI memory that is the easiest for the developer to use… AI models will continue to improve, but the durable advantage will come from what your AI knows about your business, how reliably it can recall it, and how safely it can share that knowledge across people, agents, and applications. That is the next frontier – and it’s where we intend to lead.”

The MemVerge commercial MemMachine offerings deliver, it says, enterprise-class scalability, security, and support, with capabilities for compliance, orchestration, observability, and enterprise integration. The open source MemMachine project is available now at www.memmachine.ai, as are MemVerge’s parallel commercial offerings.

Bootnote

A Fortune report says MIT’s NANDA initiative published “The GenAI Divide: State of AI in Business 2025” study, which shows that “about 5 percent of AI pilot programs achieve rapid revenue acceleration; the vast majority stall, delivering little to no measurable impact on P&L.” Lead author Aditya Challapally told Fortune that the MIT research points to flawed enterprise integration. Generic tools like ChatGPT excel for individuals because of their flexibility, but they stall in enterprise use since they don’t learn from or adapt to workflows. This is the issue that MemVerge wants to solve with its long-context AI Memory MemMachine software.