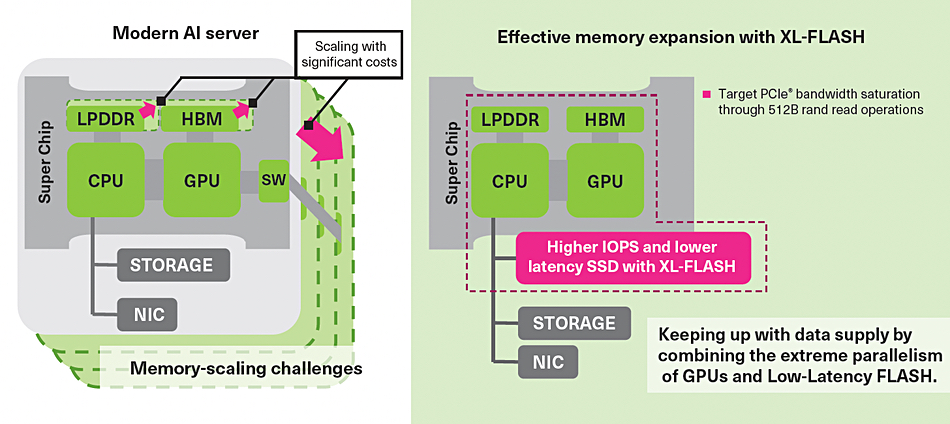

Kioxia is working with Nvidia to build extremely fast AI SSDs that connect directly to GPUs and augment high-bandwidth memory (HBM), with availability expected in 2027.

As reported by Japan’s Nikkei, Koichi Fukuda, chief engineer of Kioxia’s SSD application technology division, presented at an AI market technology briefing. He said that Kioxia, at Nvidia’s request, is developing a 100 million IOPS SSD. Two of these would be connected directly to an Nvidia GPU, providing a combined 200 million IOPS and partially replacing HBM for GenAI workloads. The AI SSD will also support PCIe 7.0.

Fukuda said: “We will proceed with development in a way that meets Nvidia’s proposals and requests.” The Nikkei report provides no further technical details, but we can make some educated guesses.

Kioxia has two flash development tracks: mainstream TLC (3 bits/cell) and QLC (4 bits/cell) 3D NAND, and faster XL-Flash SLC (1 bit/cell) NAND, as used in its FL6 drive. Kioxia classes XL-Flash as storage-class memory. A 2023 FL6 datasheet from Kioxia Japan says the drive uses BiCS4-generation 96-layer 3D NAND and, thanks to its 16-plane architecture, delivers 1.5 million/400,000 random read/write IOPS and 6.2 GBps sequential read and write bandwidth.

Gen 2 XL-Flash, announced in 2022, supports both SLC and MLC (2 bits/cell) modes. It uses BiCS5 112-layer flash and keeps the 16-plane architecture, but eight planes can be active simultaneously instead of four in Gen 1. According to Kioxia, the technology has “a 128 gigabit (Gb) die for SLC / 256 gigabit (Gb) die for MLC (in a 2-die, 4-die, 8-die package), a 4 KB page size for more efficient operating system reads and writes, fast page read and program times, and a read latency of less than 5 microseconds.”

A mid-2025 InnoGrit N3X SSD reference design used Gen 2 XL-Flash in SLC mode with a PCIe Gen 5 x4 controller to deliver 3.5 million/700,000 random read/write IOPS and 14/12 GB/s sequential read/write bandwidth. Its latency was as low as 13 μs for reads and 4 μs for writes.

A 2025 Kioxia America infographic presents XL-Flash as CXL-attached memory expansion for GPUs, with average read latency under 10 μs. It gives no IOPS numbers, but then CXL is a coherent load-store memory mechanism, not a block-transfer technology like NVMe. Because Fukuda quoted an IOPS figure, which is a block-storage metric, we think he may not have been referring to a CXL-attached AI SSD.

Fukuda said the AI SSD would be directly connected to the GPU. He also said it would support PCIe Gen 7, which offers four times the per-lane bandwidth of PCIe 5. If IOPS scaled linearly with link speed, a Gen 2 XL-Flash PCIe 7 device could deliver 14 million/2.8 million random read/write IOPS. That is still a long way short of 100 million IOPS.
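To make the scaling step explicit, here is a back-of-envelope sketch. It assumes IOPS grow linearly with PCIe per-lane transfer rate (32 GT/s for Gen 5, 128 GT/s for Gen 7), which real controllers and flash media are unlikely to achieve in practice. The starting figures are the InnoGrit N3X numbers quoted above; the `scale_iops` helper is purely illustrative.

```python
# Back-of-envelope sketch: scale PCIe Gen 5 IOPS to PCIe Gen 7.
# ASSUMPTION: IOPS scale linearly with per-lane transfer rate (optimistic).

# Raw per-lane transfer rates in GT/s for each PCIe generation.
PCIE_GT_PER_S = {5: 32, 6: 64, 7: 128}

# InnoGrit N3X reference design (Gen 2 XL-Flash, SLC mode, PCIe Gen 5 x4).
GEN5_RANDOM_READ_IOPS = 3_500_000
GEN5_RANDOM_WRITE_IOPS = 700_000

# Per-SSD figure Fukuda cited.
TARGET_IOPS = 100_000_000


def scale_iops(iops: int, from_gen: int, to_gen: int) -> int:
    """Hypothetical helper: scale IOPS by the ratio of per-lane link rates."""
    return iops * PCIE_GT_PER_S[to_gen] // PCIE_GT_PER_S[from_gen]


read_gen7 = scale_iops(GEN5_RANDOM_READ_IOPS, 5, 7)    # 4x the Gen 5 figure
write_gen7 = scale_iops(GEN5_RANDOM_WRITE_IOPS, 5, 7)  # 4x the Gen 5 figure
shortfall = TARGET_IOPS - read_gen7

print(f"PCIe 7 estimate: {read_gen7:,} read / {write_gen7:,} write IOPS")
print(f"Shortfall vs target: {shortfall:,} IOPS "
      f"({TARGET_IOPS / read_gen7:.1f}x more needed)")
```

Even under this generous linear assumption, a single device reaches only about a seventh of the 100 million IOPS target.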

Kioxia and Sandisk have a NAND fabrication joint venture and use the same NAND dies. Sandisk is developing an interposer-based, multi-stack high-bandwidth flash (HBF) technology with SK hynix, the premier supplier of HBM to Nvidia. The aim is to offload expensive HBM onto cheaper HBF, which, like HBM, is built from stacked dies connected by TSVs to a logic base die. HBF is intended to provide 8 to 16 times HBM’s capacity at the same cost, and to let GenAI KV cache token data evicted from HBM be stored in HBF, avoiding lengthy GPU recomputation of those token values.

Kioxia has already revealed a high-bandwidth SSD project with daisy-chained flash beads for edge servers doing AI work. A prototype offers 5 TB of capacity and 64 GB/s across a PCIe 6 bus, using PAM4 technology, and augments the host server’s DRAM.

There is no indication that Kioxia’s Nvidia-requested 200 million IOPS SSD pair will use this daisy chain technology, but we could envisage a PCIe 7 bus version of it.

As noted above, Fukuda also said the AI SSD would be directly connected to the Nvidia GPU, which suggests an interposer link. Let us imagine an HBF implementation using XL-Flash: a multi-layer stack of XL-Flash dies connected by TSVs to a base logic layer. Eight Gen 2 XL-Flash PCIe Gen 7 devices could theoretically deliver 112 million IOPS, assuming IOPS increase linearly with device count. Now we are in the right territory.
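The device count above can be checked with simple arithmetic. This hypothetical sketch takes the 14 million IOPS linear-scaling estimate per Gen 2 XL-Flash PCIe Gen 7 device as given, and assumes a stack's IOPS are simply the sum of its devices' IOPS. The `devices_needed` helper is illustrative, not anything Kioxia has described.

```python
import math

# ASSUMPTION: per-device read IOPS from the linear PCIe Gen 7 scaling estimate.
GEN7_DEVICE_READ_IOPS = 14_000_000
# Per-SSD target Fukuda cited, and two SSDs per GPU as reported by Nikkei.
PER_SSD_TARGET_IOPS = 100_000_000
SSDS_PER_GPU = 2


def devices_needed(target_iops: int, per_device_iops: int) -> int:
    """Hypothetical helper: fewest devices whose summed IOPS meet the target,
    assuming IOPS add linearly across devices."""
    return math.ceil(target_iops / per_device_iops)


n_devices = devices_needed(PER_SSD_TARGET_IOPS, GEN7_DEVICE_READ_IOPS)
per_ssd_iops = n_devices * GEN7_DEVICE_READ_IOPS
per_gpu_iops = SSDS_PER_GPU * per_ssd_iops

print(f"{n_devices} devices per SSD -> {per_ssd_iops:,} IOPS per SSD, "
      f"{per_gpu_iops:,} IOPS per GPU")
```

Seven devices would fall just short (98 million IOPS), so eight is the smallest count that clears 100 million per SSD, and a pair of such SSDs would exceed the 200 million IOPS per-GPU figure.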

This gives us three technology avenues for Kioxia’s AI SSD: CXL-connected XL-Flash drives, daisy-chained XL-Flash beads, and an XL-Flash HBF implementation. We have asked Kioxia for clarification and, if and when we hear back, will update this article.

Sandisk intends to deliver first samples of its HBF memory in the first half of 2026 and controllers in early 2027, around the same time that Kioxia’s extremely fast AI SSD could appear.